- Resources /

- All /

- Infographic /

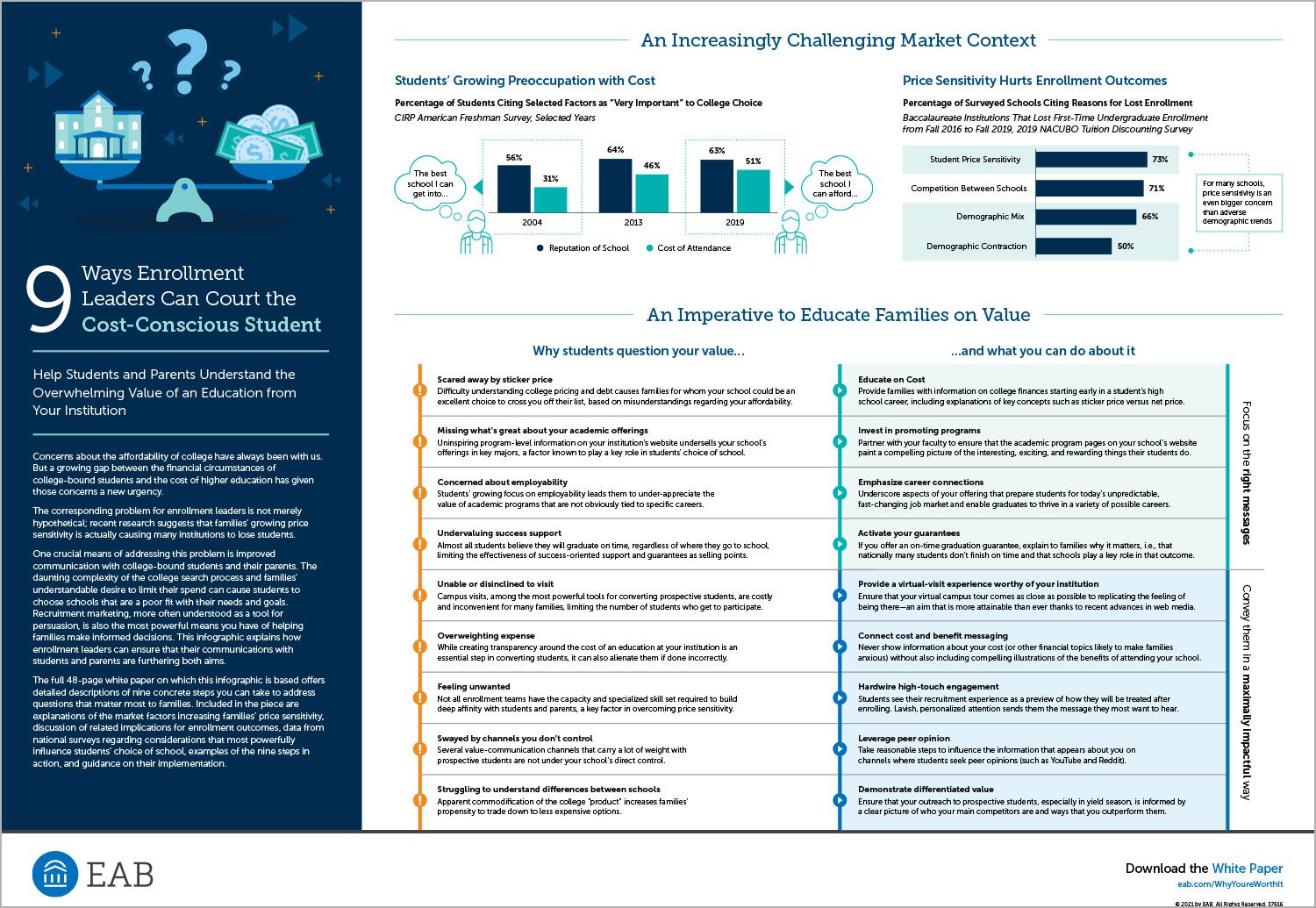

9 Ways Enrollment Leaders Can Court the Cost-Conscious Student

Concerns about the affordability of college have always been with us. But a growing gap between the financial circumstances of college-bound students and the cost of higher education has given those concerns a new urgency.

The corresponding problem for enrollment leaders is not merely hypothetical; recent research suggests that families’ growing price sensitivity is actually causing many institutions to lose students.

One crucial means of addressing this problem is improved communication with college-bound students and their parents. The daunting complexity of the college search process and families’ understandable desire to limit their spend can cause students to choose schools that are a poor fit with their needs and goals.

Recruitment marketing, more often understood as a tool for persuasion, is also the most powerful means you have of helping families make informed decisions. This infographic explains how enrollment leaders can ensure that their communications with students and parents are furthering both aims.

The full 48-page white paper on which this infographic is based offers detailed descriptions of nine concrete steps you can take to address questions that matter most to families. Included in the piece are explanations of the market factors increasing families’ price sensitivity, discussion of related implications for enrollment outcomes, data from national surveys regarding considerations that most powerfully influence students’ choice of school, examples of the nine steps in action, and guidance on their implementation.

More Resources

Help prospective students complete enrollment with email nudges

How to capture stealth prospect applicants